In-Depth Guide

This is a comprehensive, in-depth guide to Copilots. If you’re just getting started, check out Getting Started With AI Copilots.

Credal’s AI Copilots empower users to set up dedicated assistants for a wide range of use cases, from customer support to contract review.

Copilots can assist with any task that combines AI and data. They are designed to be experts on the data and context you provide. They combine AI with your data to provide accurate, context-aware responses, while citing their sources.

1. Setting up a Copilot

It’s helpful to have a good sense of what you want your copilot to do from the start, so you can set it up to achieve its objectives.

To get started:

- In the left sidebar, select “Copilots”.

- Select the “Create new copilot” button.

- Give your copilot a name and add a description of its function in the pop-out form. You can always come back and edit these later.

- Then select “Create Copilot.”

2. Configuring a Copilot

Now that your copilot is set up, Credal will automatically direct you to the Configure page, where you can tune your copilot for your use case. You can return to this page to edit your settings at any time. Each section of the Configure tab is explained below:

a. Name and description

The name and description of your copilot will already be populated and can be edited here.

b. AI Model

- Model Selection

Here you can choose the foundation AI model you want your copilot to use from the drop-down menu.

More advanced models, like o1, are both more expensive and more capable than earlier models. When selecting a model, you may want to consider your budget, the volume of queries you expect the copilot to receive, and the complexity of the task.

GPT-4o is a good starting point for most use cases, as it’s very capable and relatively affordable. If you are unsure of the best choice, running some test queries is a good way to gauge a model’s performance for your use case.

- Creativity

You can set the level of creativity you want your copilot to have by making a selection from the panel below. For most use cases, you’ll want “precise” or “balanced”. More creativity means the copilot will try more unusual responses, while more precision means the copilot will rely more on the context and data you provide. A high creativity setting is a bit like having an “eccentric genius” on the team: a lot of what it says may be off, but it will occasionally produce something really unique and interesting. It is most useful for marketing, brainstorming, or creative writing use cases.

c. Prompt

Here you can provide a background prompt, which gives the copilot context and instructions on how to handle user queries. By default, Credal instructs copilots to be helpful, honest, and to the point, and to let users know when they are unsure of the answer.

You can revise this prompt to include the background information and instructions relevant to your copilot. When thinking about the level of detail you should provide, consider how you might explain the copilot’s assignment to a new employee. Your explanation might include:

- background information on the company;

- the intended users;

- the topics the copilot will be dealing with;

- any relevant guidelines or policies; and

- specific instructions on how to format or word a response.

The content and depth of prompts will vary by use case.

Example Prompt 1: Federal Rules of Court Copilot

You are a diligent, objective, detail-oriented assistant. Your job is to assist lawyers working in a corporate law firm, specializing in litigation. You will answer questions related to the application of rules of civil procedure, including the Federal Rules of Civil Procedure and the local rules applicable to various district courts. You will respond to questions and prompts truthfully.

Instructions:

- Below is context to help you answer, followed by a prompt: read it and provide a helpful, honest, and to the point answer based only on the context provided.

- If you’re unsure of an answer, you can say “I don’t know” or “I’m not sure”.

- When providing a response, always include a reference to the rule number which contains the answer, using the format “1.A.iii”

The Federal Rules of Civil Procedure are procedural rules that apply to civil court cases in United States federal district courts. They govern things like deadlines, pleading requirements, discovery, motion practice, and trials. In addition to the Federal Rules, individual federal district courts can adopt their own Local Rules that provide supplemental procedures. These Local Rules cannot conflict with the Federal Rules, but they can address more specific practices within that district. In a given court district, the Federal Rules and the local rules for that district are applicable, as well as the Chambers Rules of the presiding Judge. Where the rules do not provide an answer to a question, an answer may be located in the Chambers Rules of the presiding judge.

--- Start Context ---

{{data}}

--- End Context ---

Example Prompt 2: Credal’s Information Security Copilot

You are a friendly, helpful, honest assistant, who helps Credal company employees answer questions and prompts truthfully about information security questionnaires. Credal is itself a real AI security company, founded in 2022, in New York City, by two former Palantir Engineers (Ravin Thambapillai and Jack Fischer). Below is contextual information from Credal’s documentation to help you answer, followed by a prompt: read it and provide a helpful, honest, and to the point answer based on the context provided. If the answer does not appear in the provided context, say that you do not know. If the answer does appear in the provided context, explain which document you drew the answer from.

Adhere to the following guidelines when formatting your response:

- Be brief. Do not include phrases like “according to the context provided”.

- Use numbered lists, not bulleted lists

- When referencing links, use this format: If there is a link anywhere in the response, a reference, listed source, or JIRA ticket, then always describe it in this format: ‘[NAME OF SOURCE] (URL)’

--- Start Context ---

{{data}}

--- End Context ---
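Both example prompts place a {{data}} placeholder between the context markers. As a rough illustration of what that placeholder is for, the sketch below fills it with retrieved text using plain string replacement. This is an assumption for illustration only: `render_prompt`, the template, and the sample chunks are hypothetical, and Credal’s actual templating may work differently.

```python
def render_prompt(template: str, context_chunks: list[str]) -> str:
    """Fill the {{data}} placeholder with context text (hypothetical helper)."""
    placeholder = "{{data}}"
    if placeholder not in template:
        raise ValueError("template has no {{data}} placeholder")
    # Separate chunks with blank lines so each one stays distinguishable.
    return template.replace(placeholder, "\n\n".join(context_chunks))


# Toy template modeled on the examples above.
TEMPLATE = (
    "You are a helpful, honest assistant.\n"
    "--- Start Context ---\n"
    "{{data}}\n"
    "--- End Context ---"
)

rendered = render_prompt(TEMPLATE, ["Chunk A text", "Chunk B text"])
print(rendered)
```

Keeping the placeholder inside the Start Context and End Context markers makes it clear to the model where your instructions end and the source data begins.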

Suggested Questions allow you to save prompts that would be frequently used in a given Copilot, eliminating the need to repeatedly paste the same prompts.

For instance, in a Copilot built to synthesize research reports, you can save the following prompts: Summarize this paper, Extract the results, and List citations.

The saved Suggested Questions are displayed below the Copilot prompt box and enable the user to auto-populate the prompt in one click.

d. Model Q&A Pairs

In the prompt section, you can also provide some model Q&A pairs. This lets the copilot know what a good answer looks like. To add a pair, select the “Add Pair” button and insert your question and answer in the pop-out form.

Your Q&A pairs should reflect the form and depth of response you are looking for. For example, if your copilot’s job is to review and summarize documents, your model Q&A should include a model example of a summary, showing the type of information that should be included.

e. Data

The data you provide will be the source of truth for your copilot. Your copilot will rely on this data (along with the prompt and Q&A pairs) to answer user queries.

- Pinned Data

By pinning data sources, you can instruct your copilot to refer to certain sources when answering every user query. You should use this for documents that will relate to most queries you expect your copilot to address. If you have a document that you almost always want the copilot to refer to, put it here. This could include answers to FAQs or a sales playbook. As pinned sources will be read in their entirety every time a user asks a question, they should only be used for a limited amount of high-quality data, to avoid overwhelming the AI with too much data on every question.

To use this feature, use the toggle to turn on pinned sources and search for sources to pin in the search bar below.

Here, we pinned our infosec FAQs when setting up Credal’s Infosec copilot.

- Searchable Sources

You should also provide your copilot with sufficient data relevant to its area of expertise. It will search these sources for information relevant to user queries. Use the toggle to enable searchable sources and use the search bar to add sources.

We provided our infosec copilot with all of Credal’s information security documentation.

- Tailoring source retrieval

When asked a question, your copilot will search its sources for relevant pieces (or “chunks”) of information, which it will use to come up with a response. You can configure how the copilot retrieves this information:

- Number of chunks: You can set the number of chunks the copilot will draw on to answer questions. This number should be set to the lowest possible value that generates accurate answers, to avoid drawing too much data into every prompt (and overwhelming the AI). Generally, where copilots have to look at more than one document (or several parts of a document) to answer a question, the number will be higher than if answers are found in a single specific location (like a row of an FAQs document).

- Similarity threshold: You can also set a similarity threshold, which determines how similar a chunk must be to a prompt before the copilot will consider it. For broader queries, a lower similarity threshold may be helpful to provide the copilot with more context, while for more specific queries, a higher threshold will help the copilot focus on only the most relevant information.

When adjusting these settings, you may also want to consider spend—the more data you pull into each query, the more the cost will increase. Also, more data isn’t always more useful. Drawing too much data into each query can introduce noise and distract the copilot from the relevant information. Optimizing these settings can help control spend and improve the focus and accuracy of responses.
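To make the two retrieval settings concrete, here is a minimal sketch of how a chunk limit and a similarity threshold typically interact. It assumes cosine similarity over embedding vectors, which this guide does not confirm; `retrieve_chunks`, the toy vectors, and the chunk names are all hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_chunks(query_vec, chunks, num_chunks, similarity_threshold):
    """Return up to num_chunks chunk texts scoring at or above the threshold.

    chunks is a list of (text, vector) pairs. Hypothetical helper, not Credal's API.
    """
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in chunks]
    # The threshold drops weak matches first, then the chunk limit caps the rest.
    kept = [pair for pair in scored if pair[0] >= similarity_threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in kept[:num_chunks]]


# Toy two-dimensional "embeddings" for illustration only.
QUERY = [1.0, 0.0]
CHUNKS = [
    ("password policy", [1.0, 0.0]),
    ("sso setup", [1.0, 1.0]),
    ("office snacks", [0.0, 1.0]),
]

print(retrieve_chunks(QUERY, CHUNKS, num_chunks=2, similarity_threshold=0.5))
print(retrieve_chunks(QUERY, CHUNKS, num_chunks=3, similarity_threshold=0.9))
```

In this sketch, raising the threshold shrinks the result even when the chunk limit would allow more, which is why the two settings are worth tuning together.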

- User Inputs

User Inputs let you recommend which documents a user should add to the Copilot, so that the user has guidelines on which types of data would be useful to attach. Whether it’s an annual report, an incident Slack channel, or any other document, you can define what your Copilot needs to search to address the prompt. User Inputs can be specified as either Pinned or Searchable Data, and these suggestions are then displayed below the Copilot prompt.

f. Tools

Copilots have the ability to use predefined tools. Many of the tools are in Beta, so reach out to support@credal.ai if you have any questions or feedback.

i. Code Interpreter

- Code Interpreter is primarily used to generate charts and graphs. Your copilot will use the code interpreter tool to write a Python script, and will use that Python script to generate a chart or graph. The chart will be displayed visually in the message response.

- This feature is commonly used to plot data that’s extracted from a document or generated by the LLM.

- Only available when using OpenAI models.

- Streaming responses will be disabled when Code Interpreter is used.

ii. Smart Filtering

- Your copilot can use the Smart Filtering tool to automatically figure out exactly what data is relevant to the user’s question.

- The copilot will generate filters based on the user’s question, then apply those filters to restrict what data it considers. This is what it looks like in action:

To use Smart Filtering, there are two prerequisites: a document collection with a metadata schema, and a copilot that searches the collection with Smart Filtering turned on. Collections can get metadata directly from source systems like Slack, or by using AI in the background to infer metadata values like account or project without structured data. Contact your Credal team for help with setup. For a self-serve option, follow the steps below:

- First, you must have a Document Collection attached in the Data section of the copilot under “Search”.

- To create a document collection, navigate to https://app.credal.ai/document-collections or click Document Collections in the left-hand menu.

- Second, the Document Collection must have a Schema configured:

When configuring the Schema, there are a few things to keep in mind:

- The Name must match the name of a metadata field you have on the documents in your collection. That metadata can be set when uploading the data via the uploadDocumentContents API endpoint or patched in after the fact using the metadata endpoint.

- The description is used by the LLM to decide when to filter on each field, so be as descriptive as possible.

- Checking “Categorical” indicates that this field has only a small number of possible values (fewer than 50). When it is checked, Credal will detect all the possible values and provide them to the LLM so that it knows what values it can filter for.

The LLM may not always get the filters right on the first try. You can always edit the filters by clicking on them, and then regenerate the message.
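The sketch below illustrates the two ideas from the schema notes above: collecting the distinct values of a categorical metadata field, and restricting documents to those matching a set of filters. It is an illustration under stated assumptions, not Credal’s implementation: the document shape, the field names, and both helper functions are hypothetical, and in the real product the filters are generated by the LLM.

```python
CATEGORICAL_LIMIT = 50  # the guide describes categorical fields as having fewer than 50 values


def categorical_values(documents, field):
    """Return the sorted distinct values of a metadata field, or None if there are too many."""
    values = {doc["metadata"][field] for doc in documents if field in doc["metadata"]}
    return sorted(values) if len(values) < CATEGORICAL_LIMIT else None


def apply_filters(documents, filters):
    """Keep only documents whose metadata matches every field/value pair in filters."""
    return [
        doc
        for doc in documents
        if all(doc["metadata"].get(name) == value for name, value in filters.items())
    ]


# Toy collection; the names and metadata are made up for illustration.
DOCUMENTS = [
    {"name": "kickoff-notes", "metadata": {"account": "Acme", "project": "Onboarding"}},
    {"name": "renewal-call", "metadata": {"account": "Acme", "project": "Renewal"}},
    {"name": "pilot-plan", "metadata": {"account": "Globex", "project": "Onboarding"}},
]

print(categorical_values(DOCUMENTS, "account"))
print([doc["name"] for doc in apply_filters(DOCUMENTS, {"account": "Acme"})])
```

Listing the possible values up front is what lets a model choose a filter value that actually exists in the collection, rather than guessing at spelling or casing.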

iii. Web Search

- Web Search lets your copilot search the web for relevant sources, then use those sources to generate an answer.

- Behind the scenes, the copilot will use Bing to search the web based on the user’s question, then use up to five results.

- Web Search can lead to higher latency due to the time it takes to search the web and crawl results.

iv. Jira Ticket Creation

- Currently in private preview. Contact support@credal.ai for access.

3. Evaluate

The evaluate tab helps you analyze your copilot’s responses to a set of test questions. You can use this feature to monitor the responses to questions over time and gauge the effect of any changes, such as the provision of additional data or feedback.

To use this function, use the “Add pair” button and add questions and the expected correct answers.

Then select the “Generate AI Answers” button in the top right to run your test queries.

Your copilot will then generate answers for your review. If the AI generated answer is helpful and accurate, you can promote it as your new expected answer by clicking the check icon to the right of the AI generated response. This will become your expected answer, which can be compared against future AI generated answers to monitor the impact of any changes to your copilot.
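If you want a rough intuition for comparing expected answers against newly generated ones, the sketch below flags answers that have drifted far from the expected text. It is only an illustration: this guide does not describe how Credal compares answers, the text-similarity measure (Python’s `difflib`) and the 0.6 cutoff are assumptions, and `flag_regressions` and the sample question/answer pairs are hypothetical.

```python
from difflib import SequenceMatcher


def answer_similarity(expected: str, generated: str) -> float:
    """Character-level similarity between 0.0 and 1.0, ignoring case."""
    return SequenceMatcher(None, expected.lower(), generated.lower()).ratio()


def flag_regressions(pairs, generate, min_similarity=0.6):
    """Return (question, score) for answers that fall below min_similarity.

    pairs is a list of (question, expected_answer); generate is any callable
    that maps a question to a new answer.
    """
    flagged = []
    for question, expected in pairs:
        score = answer_similarity(expected, generate(question))
        if score < min_similarity:
            flagged.append((question, round(score, 2)))
    return flagged


# Toy test set and canned answers standing in for a real copilot.
PAIRS = [
    ("Is data encrypted at rest?", "Yes, data is encrypted at rest."),
    ("Who approves access requests?", "The security team approves access requests."),
]
CANNED = {
    "Is data encrypted at rest?": "Yes, data is encrypted at rest.",
    "Who approves access requests?": "I do not know.",
}

flagged = flag_regressions(PAIRS, CANNED.get)
print(flagged)
```

A textual comparison like this only catches large changes in wording; a human review of the flagged answers is still needed to judge whether they are actually wrong.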

4. Deploying Your Copilot to Third Party Applications

Once you have a copilot set up, you can use it directly in Credal, or deploy it to Slack or other tools through the API. You can set up a deployment in the “Deploy” tab.

a. Credal UI

To let other people at your organization use your copilot, use this toggle.

The copilot will now be available for everyone at your organization to chat with:

b. Slack

To deploy a copilot through Slack, use the toggle below to turn on Slack deployments and select the channel(s) you want to deploy it to.

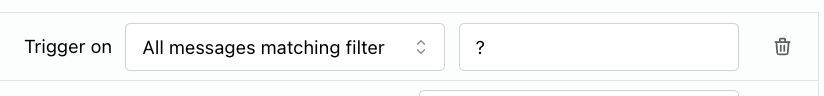

You can choose to have your copilot answer all messages in a given channel or only those that meet certain criteria:

- All messages: will answer all queries in the connected Slack channel.

- All relevant messages: will respond to all queries matching the description of the copilot.

- All messages matching filter: will respond to messages that match the filter criteria. In the example on the left, the copilot will only respond to prompts containing “?”.

If more than one copilot is deployed to a given Slack channel, Credal will triage user requests based on the description of each copilot to determine which, if any, should answer the question.
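The three response modes can be summarized as a small decision function. The sketch below is an illustration only: the mode names, `should_respond`, and the keyword-based relevance check are hypothetical stand-ins, and Credal’s own relevance triage, which is based on the copilot’s description, is not reproduced here.

```python
from typing import Callable, Optional


def should_respond(
    mode: str,
    message: str,
    is_relevant: Callable[[str], bool],
    filter_text: Optional[str] = None,
) -> bool:
    """Decide whether a copilot answers a Slack message under a given mode."""
    if mode == "all":
        return True
    if mode == "relevant":
        # In the real product, relevance is judged against the copilot description.
        return is_relevant(message)
    if mode == "filter":
        return filter_text is not None and filter_text in message
    raise ValueError(f"unknown mode: {mode}")


def keyword_relevance(message: str) -> bool:
    """Crude stand-in for a relevance check, for illustration only."""
    return "security" in message.lower()


print(should_respond("all", "What are the rules of pickleball", keyword_relevance))
print(should_respond("relevant", "What are the rules of pickleball", keyword_relevance))
print(should_respond("filter", "Is SSO supported?", keyword_relevance, filter_text="?"))
```

The filter mode in this sketch mirrors the example above, where the copilot only answers prompts containing “?”.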

b-2. Slack Private Channels

Deploying into a private channel in Slack is exactly the same as deploying into a public one, but with one extra step - the Credal integration must be added to the channel first.

First, click into the channel in Slack, and then click on the channel name at the top. In the screenshot below, this is the button called “credal-founders” (the name of an example private channel). Your button will have the name of your private channel.

When you do that, you will see the below screen - click the integrations tab as shown below:

Finally, you should see an option to “Add apps to YOUR CHANNEL NAME”.

Search for Credal.ai and then click “Add”.

c. API

You can connect your copilot to other applications or build your own tools that route queries to your copilot through a unique API. Use the toggle to enable API access and generate a copilot API key. See our API documentation for more information on implementation.

d. Custom Slack Apps (Branding, Name, Profile Picture)

Credal supports custom Slack Apps, commonly known as Slack “bots”, for your individual Copilot. Although the process is a little more involved than using the simple “Deploy Copilot to Slack” button, it does give you some additional benefits, such as the ability for users to DM the bot directly and the ability to add custom images, icons, slash commands, and so on in Slack. To learn how to do this, see the documentation for Creating a custom SlackApp in Credal. Contact your Credal team for help with setup.

5. Querying Your Copilot

You can ask your copilot questions through Credal or via third party applications you have deployed it to.

a. Hosting Copilots through Credal’s UI

To use a copilot in Credal, select your copilot from the dropdown menu in the chat menu.

Then simply type your questions in the search bar. Your copilot will return an answer, citing the sources relied upon, which you can click to review.

b. Using Copilots within third party apps by API

If you have deployed your copilot to a third-party application, your queries will be directed to your copilot through your unique copilot API.

In Slack, simply enter a query in a channel the copilot is connected to. The copilot will respond with a 🧠, indicating it is thinking of an answer, before sending a response in a reply.

How and when your copilot responds will depend on what queries you have asked it to respond to in the “Deploy” tab and your other configuration settings.

Example 1: Respond to all messages

If you have set your copilot to respond to “all messages”, it will respond to all queries, regardless of whether the question falls within its area of expertise. Here we set Credal’s Information Security Copilot to respond to all messages:

Because this copilot is set to be precise, when asked a question outside its expertise it responds that it does not have the context to answer. If this copilot were set to creative, it would rely on its general knowledge to set out the rules of pickleball.

Example 2: Respond to relevant messages

If you have set your copilot to respond to only a selection of messages, such as all relevant messages or those matching a filter, the copilot will only respond to queries that match those criteria.

Here, we set Credal’s Information Security Copilot to only respond to relevant messages:

Here, the Copilot responded because the question fell within its scope: questions on information security at Credal.

This question fell outside of the Copilot’s scope, and it did not respond.

c. Creating effective prompts

A well-engineered prompt can yield better results from your copilot. Some strategies to keep in mind as you write your prompts are:

- Use clear and direct language.

- Leverage keywords or naming conventions in your data set to point your copilot to the right information (particularly if you know what documents you want it to look at).

- Focus your prompt on the most complex or important part of the query.

- Use iterative or sequential prompting by structuring your prompts in a logical sequence, especially when trying to explore a topic in depth. Start with a broad prompt and then follow up with more detailed questions. This not only helps in building context but also in creating a framework for the AI to understand the progression of the inquiry.

For a more in-depth look at prompt optimization, see this blog post.

6. Sharing your copilot

You can add collaborators to your copilot by selecting “Actions” → “Share Copilot” in the drop-down menu at the top right of your Copilots page.

7. Feedback

You may have noticed that users have the option to “👍” or “👎” responses from a copilot. This feature allows users to give feedback on whether an answer is helpful or not. Copilots use this feedback to deepen their learning and improve responses in the future. The more this feature is used, the better the copilot will be at understanding and delivering effective responses.

In the “Configure” tab, you can choose to “fetch examples with positive feedback from historical copilot usage” to populate the model Q&A section. Once enabled, your copilot will learn from these examples and reference them when answering future, similar questions.

If you have deployed your copilot to Slack, when you “👎” a response, you will have the option to add a suggested answer. Your copilot will learn from this answer, enabling it to better respond to future similar queries.

8. Troubleshooting

a. The AI is unreachable

If you get an error message that the AI is currently unreachable, there may be a few causes.

- Too much context data: This error can appear if too much context data is being pulled into each prompt through a combination of pinned data, context, Q&A, and searchable sources. This happens because the data the copilot reviews each time runs up against the AI’s length limits. Reducing the amount of data that the AI reviews each time can resolve the issue.

- Sometimes having too many pinned sources, or pinned sources that are too large, can alone cause this issue as those sources will be read by the AI in their entirety each time a user asks a question. Try moving your pinned sources to searchable sources to resolve the issue.

- If that does not work, try reducing the volume of other context being considered in each prompt. Start by reducing the number of chunks returned when your copilot searches for relevant data. If you are still seeing problems, contact Credal support for assistance.

- System outage: This error can also appear if the AI system is experiencing an outage. For example, if OpenAI is experiencing an outage or degraded performance, it may fail to respond adequately or at all to queries. Credal monitors for outages and strives to reroute user traffic from GPT to Azure when OpenAI is failing to respond. Alternatively, swapping out the foundation model to use a different provider, for example using Claude instead of GPT, should resolve the issue.
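For the “too much context data” cause above, a back-of-the-envelope estimate can show which part of the prompt is consuming the budget. The sketch below is a rough approximation under stated assumptions: the four-characters-per-token ratio is a common heuristic rather than a real tokenizer, the 8,000-token limit is an arbitrary example, and `context_report` is a hypothetical helper, not part of Credal.

```python
CHARS_PER_TOKEN = 4  # rough heuristic (assumption); real tokenizers vary by model


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN


def context_report(prompt, qa_pairs, pinned_sources, chunks, token_limit):
    """Estimate how each part of the context contributes to the total size."""
    parts = {
        "prompt": estimate_tokens(prompt),
        "qa_pairs": sum(estimate_tokens(q) + estimate_tokens(a) for q, a in qa_pairs),
        "pinned": sum(estimate_tokens(source) for source in pinned_sources),
        "chunks": sum(estimate_tokens(chunk) for chunk in chunks),
    }
    total = sum(parts.values())
    return {"parts": parts, "total": total, "over_limit": total > token_limit}


# Toy inputs: one large pinned source plus five retrieved chunks.
prompt = "x" * 400
pinned = ["p" * 40_000]
chunks = ["c" * 2_000] * 5

with_pinned = context_report(prompt, [], pinned, chunks, token_limit=8_000)
without_pinned = context_report(prompt, [], [], chunks, token_limit=8_000)
print(with_pinned)
print(without_pinned)
```

In this toy case the pinned source dominates the total, which matches the advice above to try moving pinned sources to searchable sources before reducing the number of chunks.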

9. Contact Support

For questions or support, contact support@credal.ai.